This summer, EVL updated their classroom wall computer. Here is a short report about it (PDF version: SAGE2 on an 18-screen display with one PC).

SAGE2 on an 18-screen display with one PC

CyberCommons

CyberCommons is the main class/meeting/brainstorm/VTC room at EVL. Developed since 2008, it has seen many iterations of technology over the years, but the goal remains the same, as described in this excerpt from the EVL site:

"A Cyber-Commons is a technology-enhanced meeting room on a university campus that supports local and distance collaboration and promotes group-oriented problem solving. It is a next-generation computer science resource that relies upon advanced networking and multiple high-definition (HD) displays to transform the traditional computer lab / classroom filled with terminals – to a work environment that facilitates and encourages group collaboration.

Envisioned by EVL director Jason Leigh and Computer Science faculty member Andy Johnson, the space is designed to connect students with advanced technologies and tools in virtual space with one another. The Cyber-Commons is a direct outgrowth of EVL's collaborative research focused on the development of technologies and tools for scientists linked via high-speed networks."

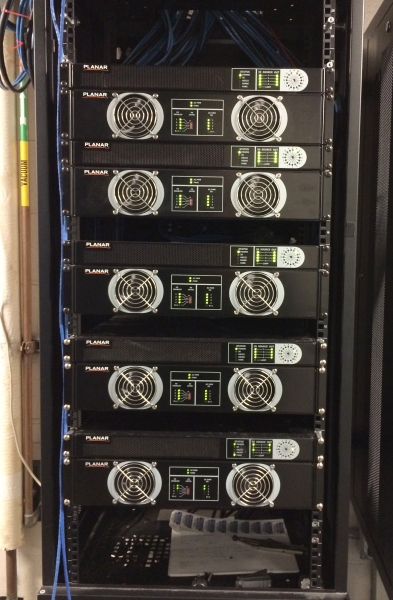

In late 2011, we replaced the NEC LCD displays with 18 Clarity Matrix 46" displays from Planar (6×3 layout, passive 3D, 1366×768 each). The LCDs stay on most of the day, every day, to facilitate impromptu use.

Initially, the wall was driven by a large and noisy server-class PC with many Nvidia GPUs. For the last three years, it has been driven by a cluster of 6 display nodes (1U server with an Nvidia GPU, interconnected by 10G network) and a master node (used as file server).

When using SAGE, we relied heavily on streaming and powerful endpoints (GPUs and 10Gbps networks). With the development of SAGE2 and lighter-weight technologies (client-side rendering in the browser, cloud, JavaScript), we wanted to explore again the possibility of driving the wall with a single PC. The goal was to run Microsoft Windows, so that the wall can be used for various classes (SciVis, InfoVis, Gaming, Design, etc.) and in various configurations (SAGE2, Unity3D, presentations and web pages).

Build

It took the summer to get through the hurdles of mixing new hardware and untested technologies with the existing wall of displays. Nothing went easily, but we found a fix at every step.

function BuildASAGE2Wall() {

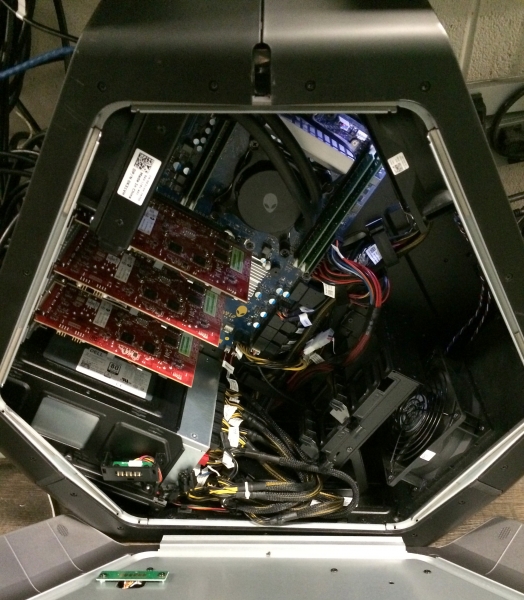

BuyComputer(); // Dell Alienware Area-51 gaming rig

AddGPUs(); // AMD FirePro W600

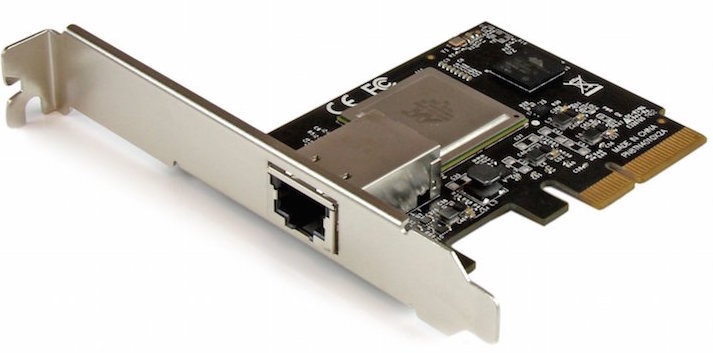

AddNIC(); // StarTech 10GBASE-T NIC

AddVideoAdapters(); // MiniDisplayPort to DVI

PlugVideoCables(); // DVI cables to Planar controller

PlugNetworkCable(); // Cat6 cable to 10G switch

UpdateOperatingSystem(); // Update Windows 8.1, upgrade to Windows 10

UpdateVideoController(); // Planar Controller Firmware

UpdateNetworkSwitch(); // Update Netgear 10G configuration

ConfigureWallLayout(); // Create AMD display groups

InstallSAGE2(); // Easy

Use(); // Good

}

Finished product

The end product is a very large desktop running Windows 10 (8160px by 2304px, for ~ 18Mpixels).
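As a quick check of these figures, the arithmetic below divides the reported desktop size by the 6×3 screen grid. It uses only the numbers given in this report. Note that the result is 1360 pixels per column, slightly less than the 1366 pixels listed for the panels; the report does not say why, so treat this as an observation rather than an explanation.

```javascript
// Desktop size reported in the text, and the 6x3 screen grid of the wall.
const desktop = { width: 8160, height: 2304 };
const grid = { columns: 6, rows: 3 };

// Share of the desktop covered by each screen.
const perScreen = {
  width: desktop.width / grid.columns,
  height: desktop.height / grid.rows,
};

// Total pixel count, in megapixels.
const megapixels = (desktop.width * desktop.height) / 1e6;

console.log(`${perScreen.width}x${perScreen.height} per screen`); // 1360x768 per screen
console.log(`${megapixels.toFixed(1)} Mpixels in total`);         // 18.8 Mpixels in total
```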

The PC runs outside the room next to the video controllers, in the hallway. We use a wireless Bluetooth keyboard and mouse on the desk a few feet away from the wall. We plan to test a high-precision (high-dpi) wireless gaming mouse to see if it improves the navigation on such a large high-resolution display surface.

Running SAGE2

Once SAGE2 is installed, the wall looks like this: a large uniform desktop for data sharing and collaboration.

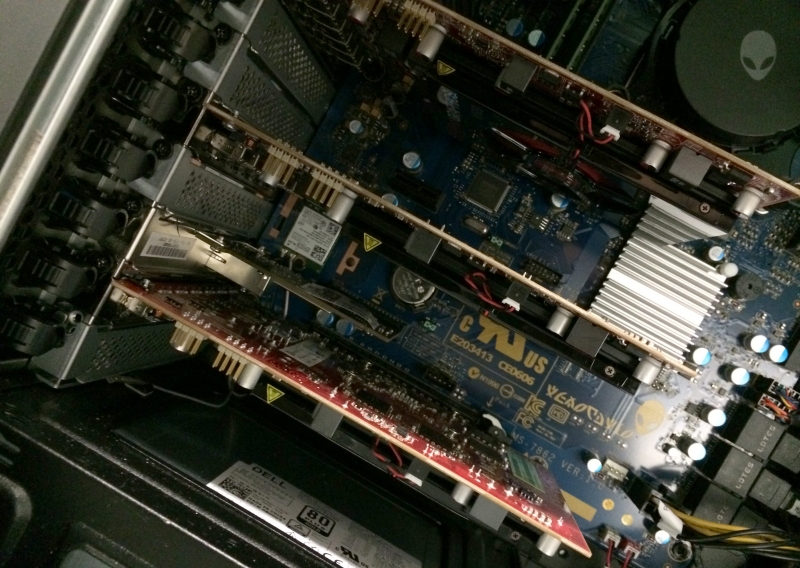

GPUs and Connectors

There are only a few ways to run 18 screens from a single computer, and even fewer if you consider a gaming-class computer with only 3 PCI-express slots for GPUs. We could run 3 gaming GPUs with 4 display outputs each (e.g. Nvidia 9xx), but this would give us only 12 screens. To this day, only server-class computers offer 4 or more PCI-express slots, making the system potentially much more expensive, larger, and louder. So we chose to use 3 AMD FirePro W600 GPUs, each with 6 display outputs (mini-DisplayPort 1.2 connectors, up to 4K resolution each). Each card costs ~ $500, is rather small (single-slot), pretty quiet, and does not require extra power connectors (no extra PSU cable needed).
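The output-count reasoning above can be written down as a short calculation. This is only a sketch of the arithmetic in the text; the function name `screensDriven` is made up for illustration.

```javascript
// Screens on the wall and GPU slots in the gaming-class PC (both from the text).
const screens = 18;
const gpuSlots = 3;

// Number of screens a set of identical GPUs can drive.
function screensDriven(outputsPerGpu, gpuCount = gpuSlots) {
  return outputsPerGpu * gpuCount;
}

// Minimum number of outputs per GPU needed to cover the wall with 3 slots.
const outputsNeeded = Math.ceil(screens / gpuSlots);

console.log(screensDriven(4)); // 12: gaming GPUs with 4 outputs fall short
console.log(screensDriven(6)); // 18: FirePro W600 with 6 outputs covers the wall
console.log(outputsNeeded);    // 6
```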

Mini-DisplayPort adapters exist for HDMI, DVI, and DP. They cost ~ $20 each.

Configuration

Since Microsoft Windows 7, fullscreen applications only work across a single monitor (even if monitors are grouped into a desktop). Hardware vendors usually provide solutions to overcome this and let users maximize an application across multiple monitors. These can combine software, drivers and hardware, with some limitations (for instance Quadro drivers on Nvidia Quadro cards, SLI or Surround gaming on Nvidia, Display Groups on AMD FirePro cards).

For performance and ease of use, it becomes important to select an appropriate layout of GPUs onto screens, given the expected use case scenarios.

To build an 18-screen display, various configurations were possible. The GPU outputs (6 per card) can be mapped to the following main display configurations:

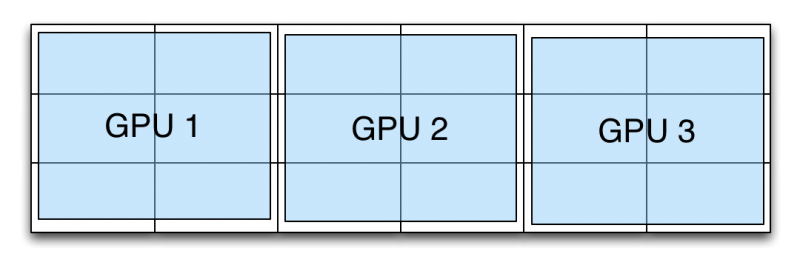

Layout A

Each GPU set in a 2×3 layout (2 columns, 3 rows): it provides a good locality for performance (for instance, an application on the center of the display will only run on one GPU).

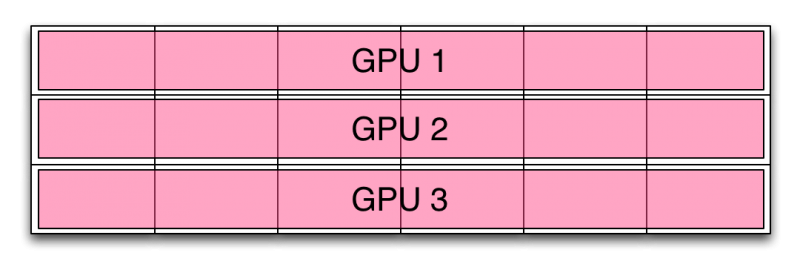

Layout B

Each GPU set in a 6×1 layout (6 columns, 1 row): this was our previous configuration, running with a 6-node cluster and Matrox TripleHead2Go adapters. It offers poor locality.

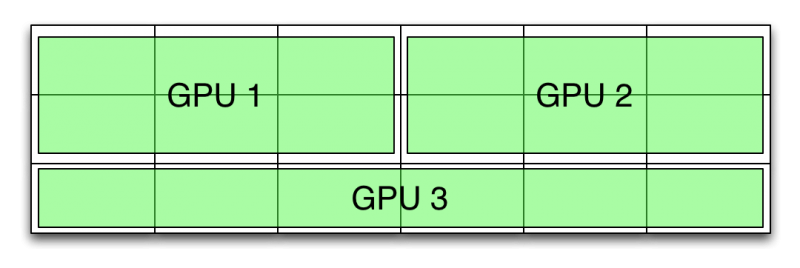

Layout C

Mixed configuration, two rectangles and one row: it provides decent locality with two large groups. Native Windows applications (e.g., a web browser or Unity3D) will maximize to a large portion of the wall. Two applications can run side-by-side, each on a single GPU.

Final layout

We considered each approach and decided to choose 'Layout C'. In any case, a window can be resized manually to any size on the wall.

The sections are mapped to Display Groups using the AMD utility. Once the displays are configured into a group, they behave as a single monitor at the OS level, allowing for easy fullscreen configuration. However, a group cannot be created across multiple GPUs (only within a single GPU, 6 monitors at most).
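To make the locality argument concrete, the sketch below counts how many GPUs a window touches under each layout. Layouts A and B follow the descriptions above. The exact geometry of Layout C is only shown in the figure, so the version here (two groups of 3 columns by 2 rows above one 6×1 row) is an assumption, and the function name `gpusTouched` and the two sample windows are made up for illustration.

```javascript
// Each layout maps a screen (row, column) of the 6x3 wall to a GPU index (0..2).
const layouts = {
  // Layout A: each GPU drives 2 columns by 3 rows.
  A: [
    [0, 0, 1, 1, 2, 2],
    [0, 0, 1, 1, 2, 2],
    [0, 0, 1, 1, 2, 2],
  ],
  // Layout B: each GPU drives one full row of 6 screens.
  B: [
    [0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 1],
    [2, 2, 2, 2, 2, 2],
  ],
  // Layout C (assumed geometry): two 3x2 rectangles above one 6x1 row.
  C: [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [2, 2, 2, 2, 2, 2],
  ],
};

// Count the distinct GPUs under a window given in screen units.
function gpusTouched(layout, win) {
  const gpus = new Set();
  for (let r = win.row; r < win.row + win.rows; r++) {
    for (let c = win.col; c < win.col + win.cols; c++) {
      gpus.add(layout[r][c]);
    }
  }
  return gpus.size;
}

// Two sample windows: a full-height strip in the center, and a top-left block.
const centerStrip = { col: 2, row: 0, cols: 2, rows: 3 };
const topLeftBlock = { col: 0, row: 0, cols: 3, rows: 2 };

for (const [name, layout] of Object.entries(layouts)) {
  console.log(name, gpusTouched(layout, centerStrip), gpusTouched(layout, topLeftBlock));
}
// A 1 2
// B 3 2
// C 3 1
```

Under these assumptions, Layout A keeps a centered window on one GPU, while Layout C keeps a window that fills one of its large groups on one GPU, which matches the side-by-side use case described above.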

Browsers

As described earlier, getting fullscreen applications in a modern Windows version is a struggle. Most web browsers offer a fullscreen mode (F11 in Firefox and Chrome); however, the new Microsoft Edge does not offer one at this time (August 2015).

To overcome the 'single monitor' limitation for fullscreen mode, we used a combination of software:

- Autohotkey

  - "Fast scriptable desktop automation with hotkeys"

  - http://www.autohotkey.com/

- UltraMon

  - "UltraMon is a utility for multi-monitor systems, designed to increase productivity and unlock the full potential of multiple monitors"

  - http://www.realtimesoft.com/ultramon/

Autohotkey lets us move and resize a window. Using a script, one can search for a window by name, query the system for its overall resolution, and finally move and resize this window. This is very convenient with Firefox, which can be resized while in fullscreen mode (neat trick).

UltraMon adds the capability to resize a window across multiple monitors, using buttons it adds to the title bar of the application. For instance, we use this to maximize Google Chrome across the whole display (albeit still with window decoration and address bar).

Computer

As mentioned above, we wanted to stay in the gaming class of computer for this wall (mostly for price and noise). It is also easier for other non-visualization groups to order and replicate than a custom server-class computer. We settled on the Alienware Area-51, for around $3,000 to $3,500 once configured with a decently fast Intel i7 processor, 32GB of RAM, an SSD drive for the operating system, and a powerful 1500-Watt power supply (in case we later use 3 powerful GPUs like the Nvidia 9xx series).

The system can fit 3 double-width GPUs if needed.

The PC has a wired 1Gbps connection to the campus network for Internet access, and a 10Gbps connection to the research network for high-quality, high-bandwidth streaming. We disabled wireless for now to get more predictable network performance.

After the GPUs, the network card is one of the most demanding devices. The 10Gbps network card we usually use (Intel Ethernet Server Adapter, such as X520 or X540) requires an x8 PCI-express slot. The Alienware Area-51 has the following mapping:

- Slot 1: PCI-Express x16 Gen 3

- Slot 2: PCI-Express x4 Gen 2

- Slot 3: PCI-Express x16 Gen 3

- Slot 4: PCI-Express x1 Gen 2

- Slot 5: PCI-Express x16 Gen 3

So we had to find an x4 network card. We tried and settled on the small StarTech 10 Gigabit Ethernet Network Card (ST10000SPEX), built around a Tehuti Networks TN9210 chip, which can be found below $200 online. Preliminary tests show decent performance (~6 to 9 Gbps in the send and receive directions, using nuttcp). More tests would be required to make a definitive decision.
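A back-of-the-envelope calculation shows why the x4 Gen 2 slot is still a reasonable home for a 10G card while the x1 slot is not. The per-lane rates and encodings below are the standard PCI-express figures (5 GT/s with 8b/10b encoding for Gen 2, 8 GT/s with 128b/130b for Gen 3); the results are theoretical one-direction maxima before protocol overhead, not measurements from this machine, and the function name `slotBandwidthGbps` is made up for illustration.

```javascript
// Per-lane signalling rate (GT/s) and line-encoding efficiency per PCIe generation.
const pcieGenerations = {
  2: { gigatransfers: 5, efficiency: 8 / 10 },    // 8b/10b encoding
  3: { gigatransfers: 8, efficiency: 128 / 130 }, // 128b/130b encoding
};

// Theoretical one-direction bandwidth of a slot in Gbps, before protocol overhead.
function slotBandwidthGbps(generation, lanes) {
  const { gigatransfers, efficiency } = pcieGenerations[generation];
  return gigatransfers * efficiency * lanes;
}

// The two slots left once the three x16 slots are taken by the GPUs.
const freeSlots = [
  { slot: 2, generation: 2, lanes: 4 },
  { slot: 4, generation: 2, lanes: 1 },
];

for (const s of freeSlots) {
  const gbps = slotBandwidthGbps(s.generation, s.lanes);
  console.log(`Slot ${s.slot}: ${gbps.toFixed(1)} Gbps, enough for 10G: ${gbps >= 10}`);
}
```

By this estimate, slot 2 offers about 16 Gbps and slot 4 about 4 Gbps, which is consistent with the choice of an x4 card.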

All this leaves an x1 PCI-express slot free in the machine, maybe for something like a small HDMI capture card (Blackmagic DeckLink Mini Recorder, up to 1080p30 and 1080i60, for $140).

Display connections

Since we are using Planar displays (46", 1366×768 pixels, 3D passive stereo), the video cables from the GPUs connect to the controller boxes driving the LCDs. We had many frustrating communication problems between the GPUs and the controllers. After discussions with AMD and Planar, we realized we were running very old firmware on the controllers. After updating the boxes, it was mostly smooth. In the meantime, we had upgraded the operating system from Windows 8.1 to Windows 10. AMD graphics drivers were available and installed without issues.

Control

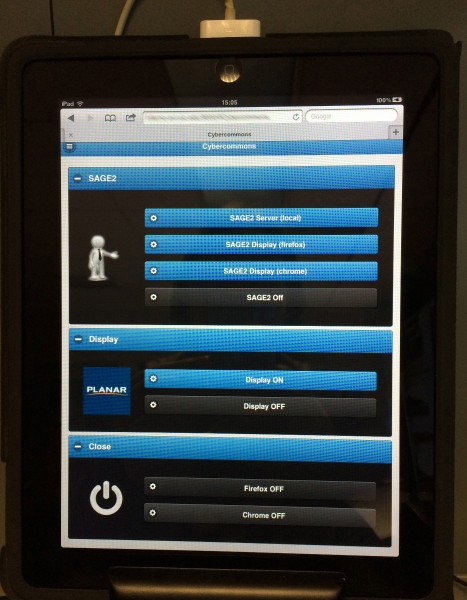

To control this system, we again use our 'sabi.js' tool: control your devices through an HTML5/Node/JavaScript framework. This is especially important here since the room hosts many classes and meetings.

Since we are using Windows, remote operation is not easy: there are no built-in ssh or telnet operations, and PowerShell remote commands are executed in a separate session. psexec could be used, but only from another Windows machine.

Sabi.js is implemented as a public web server (Node.js server with a jQuery Mobile interface). Tasks are implemented through commands, scripts, serial-port or OSC operations. RPC to other Sabi.js servers is also supported.

Sabi.js

- site: http://renambot.lakephoto.org/software/sabi-js/

- sources: https://bitbucket.org/renambot/sabi.js

Operations

The main operations are configured on a single page:

- Starting the SAGE2 server,

- Launching Firefox as a display runtime,

- Launching Chrome as a display runtime,

- Stopping SAGE2 or the web browsers,

- Turning the screens on and off (serial-port communication to the Planar controller).
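As an illustration of the general idea (named actions exposed over HTTP and mapped to local commands), here is a minimal Node.js sketch. It is not sabi.js code and does not use its configuration format: the action names, the `.bat` script names, the URL scheme and the functions `runAction` and `createControlServer` are all hypothetical. Serial-port control of the screens is left out.

```javascript
const http = require("http");
const { spawn } = require("child_process");

// Hypothetical action table: names and scripts are placeholders,
// not the actual configuration used on the wall.
const actions = {
  "sage2-start": { command: "cmd.exe", args: ["/c", "start-sage2.bat"] },
  "firefox-start": { command: "cmd.exe", args: ["/c", "start-firefox.bat"] },
  "chrome-start": { command: "cmd.exe", args: ["/c", "start-chrome.bat"] },
  "stop-all": { command: "cmd.exe", args: ["/c", "stop-all.bat"] },
};

// Launch the command for a named action; spawnFn is injectable for testing.
function runAction(name, spawnFn = spawn) {
  const action = actions[name];
  if (!action) {
    throw new Error(`Unknown action: ${name}`);
  }
  // Detach the child and ignore its output so it outlives the HTTP request.
  const child = spawnFn(action.command, action.args, { detached: true, stdio: "ignore" });
  child.on("error", (err) => console.error(`${name} failed: ${err.message}`));
  child.unref();
  return action;
}

// Build (but do not start) an HTTP server that maps /action/<name> to runAction.
function createControlServer() {
  return http.createServer((req, res) => {
    const match = /^\/action\/([\w-]+)$/.exec(req.url);
    try {
      if (!match) throw new Error("Unknown action: " + req.url);
      runAction(match[1]);
      res.writeHead(200, { "Content-Type": "text/plain" });
      res.end(`started ${match[1]}\n`);
    } catch (err) {
      res.writeHead(404, { "Content-Type": "text/plain" });
      res.end(`${err.message}\n`);
    }
  });
}
```

Calling `createControlServer().listen(8080)` would start the sketch on a port of your choice; a request to `/action/chrome-start` would then run the matching script. A real deployment would also need access control, since the server launches programs on the wall PC.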

Audio and A/V

The Alienware Area-51 PC comes with an onboard sound card. We plugged the stereo output to the room sound system without any difficulty. The PC also supports multi-channel digital outputs if needed (toslink and coaxial connectors). Standard PC speakers should work out of the box.

For video conference, we leverage standard software (Skype or Hangouts). We use the following hardware through a USB extension cable and USB hub:

- Logitech C930e: webcam chosen for its quality and wide-angle lens

- ClearOne Chat150: echo-cancelling microphone and speaker (USB)

The ClearOne Chat150 should be used as both speaker and microphone for the echo-cancelling to work. The setup is really easy with USB (it works on Linux, Mac and Windows) and works well for small groups, but it seems to pick up ambient noise in Hangouts (loud HVAC noise in our classroom). It can be found for less than $300.

The Logitech C930e (similar to the popular C920) gives a wider angle of view than most webcams, with a good video quality and decent autofocus. Price is around $100.

We are still considering getting a more integrated unit such as the Logitech ConferenceCam CC3000e. Functionally similar to the hardware we have (a USB microphone, speaker and camera), it adds PTZ (pan-tilt-zoom) capability, a remote and mounting hardware. This is basically a VTC unit operating through USB, and you can choose your favorite VTC software. It can be found for less than $800.

Usage

We are still getting used to the new wall, as the fall class schedule starts. Initial experience seems to indicate that it works decently as a display system for SAGE2 (decent 3D with WebGL) when the content stays within one display group. We are experiencing some slowdown when the SAGE2 server is also running on the Windows machine (still investigating this).

We are switching back and forth between Chrome and Firefox browsers: Firefox goes fullscreen with Autohotkey but sometimes goes all black, maybe a hardware rendering issue since the desktop is so large. Chrome works fine so far, but does not go fully fullscreen (keeping bookmark and address bars).

PQLabs (http://multitouch.com/) does not have a Windows 10 driver yet, but we were told it was coming soon. So there is no touch-enabled interaction yet.

Costs

The new elements are:

- PC: $3,200 with Nvidia 980 GPU (not used here)

- AMD GPUs: 3x $540

- 10G NIC: $200

- DVI adapters: 18x $20

- DVI short cables: 18x $10

- Repurposed or existing: LCDs, controllers, keyboard, mouse, iPad, USB devices, …

- Total: $5,560
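The total can be checked from the line items above; the snippet below simply multiplies unit prices by quantities as listed in this report.

```javascript
// New elements and unit prices in US dollars, as listed above.
const items = [
  { name: "PC", unitPrice: 3200, quantity: 1 },
  { name: "AMD GPUs", unitPrice: 540, quantity: 3 },
  { name: "10G NIC", unitPrice: 200, quantity: 1 },
  { name: "DVI adapters", unitPrice: 20, quantity: 18 },
  { name: "DVI short cables", unitPrice: 10, quantity: 18 },
];

// Sum of unit price times quantity over all items.
const total = items.reduce((sum, item) => sum + item.unitPrice * item.quantity, 0);

console.log(`Total: $${total}`); // Total: $5560
```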

Contact

- SAGE2 site: http://sage2.sagecommons.org/

- SAGE2 forum: https://groups.google.com/forum/#!forum/sage2

- Direct contact: renambot at gmail dot com